Multi-Layer Perceptrons (MLP) and the Backpropagation Algorithm

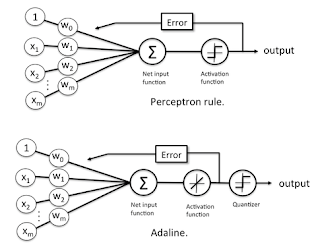

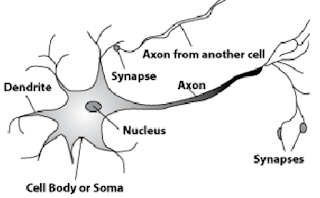

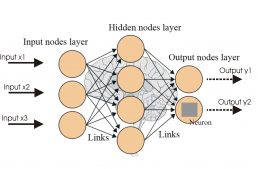

Single Layer Perceptron – This is the simplest feedforward neural network; it contains no hidden layer. Minsky and Papert analyzed the perceptron mathematically and demonstrated that single-layer networks cannot solve problems that are not linearly separable. Because they did not believe a training method could be constructed for networks with more than one layer, they concluded that neural networks would always be subject to this limitation. The multilayer perceptron (MLP) is a neural network similar to the perceptron, but with more than one layer of neurons in a feedforward arrangement. Such a network is composed of layers of neurons connected to each other by weighted connections (synapses). Learning in this type of network is usually done with the error backpropagation algorithm, although other algorithms exist for this purpose, such as Rprop (resilient backpropagation). However, the development of the backpropagation training algorithm has shown that it is possible to...
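
The linear-separability limit can be illustrated with a small sketch in Python with NumPy (the language and every name here are assumptions for illustration, not taken from the text). A single-layer perceptron trained with the classic perceptron learning rule learns AND, which is linearly separable, but always misclassifies at least one XOR input. A network with one hidden layer computes XOR exactly. To keep the example short and deterministic, the hidden-layer weights below are chosen by hand rather than learned with backpropagation.

```python
import numpy as np

# The four binary input patterns and two target functions.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
y_and = np.array([0, 0, 0, 1])  # linearly separable
y_xor = np.array([0, 1, 1, 0])  # not linearly separable


def step(z):
    """Threshold activation: 1 where z > 0, else 0."""
    return (z > 0).astype(int)


def train_perceptron(X, y, epochs=100, lr=1.0):
    """Perceptron learning rule for a single layer (no hidden units)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            pred = int(w @ xi + b > 0)
            # Weights change only when the prediction is wrong.
            w += lr * (target - pred) * xi
            b += lr * (target - pred)
    return w, b


def count_errors(w, b, X, y):
    """Number of misclassified patterns for a single-layer perceptron."""
    return int((step(X @ w + b) != y).sum())


w_and, b_and = train_perceptron(X, y_and)
w_xor, b_xor = train_perceptron(X, y_xor)
and_errors = count_errors(w_and, b_and, X, y_and)
xor_errors = count_errors(w_xor, b_xor, X, y_xor)

# Hand-picked (not learned) weights for a 2-2-1 multilayer perceptron:
# hidden unit 0 computes OR, hidden unit 1 computes AND,
# and the output fires for "OR and not AND", which is XOR.
W1 = np.array([[1.0, 1.0],
               [1.0, 1.0]])   # shape (inputs, hidden units)
b1 = np.array([-0.5, -1.5])
W2 = np.array([1.0, -1.0])    # hidden units -> single output
b2 = -0.5


def mlp_forward(X):
    """Forward pass through the hidden layer and the output neuron."""
    hidden = step(X @ W1 + b1)
    return step(hidden @ W2 + b2)


mlp_xor = mlp_forward(X)

print("perceptron errors on AND:", and_errors)
print("perceptron errors on XOR:", xor_errors)
print("MLP output on XOR inputs:", mlp_xor)
```

The step activation used here is not differentiable, so this sketch shows only what the extra layer can represent. Training such a network with backpropagation would normally use a differentiable activation such as the sigmoid.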